What is a robots.txt file?

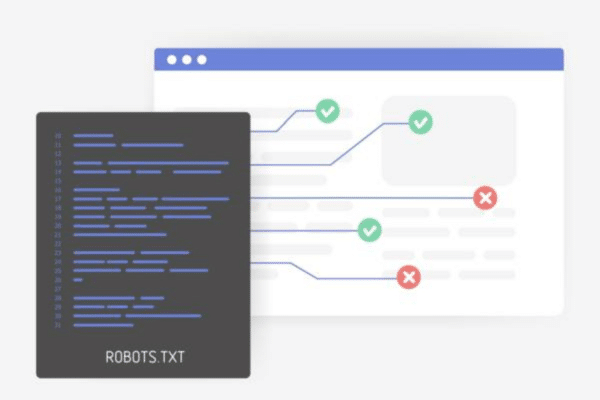

You’ve heard of this term before but don’t really get it? A robots.txt file is like the virtual bouncer guarding the entrance to your website. Its job is to tell search engine bots which areas they are allowed to visit and which ones they should avoid.

In essence, robots.txt is a plain-text file that sits at the root of your site (for example, https://www.example.com/robots.txt) and gives search engine bots a set of instructions: where they are permitted to explore and where they are not welcome. You can learn more about the robots.txt file in the Crawling and Indexing section of the Google Developers documentation.

Now, why bother optimising your robots.txt file? Well, think of it like this: just as you wouldn’t let a bull loose in a china shop, you don’t want search engine bots crawling every single page of your website indiscriminately.

By optimising your robots.txt, you effectively communicate to search engines which parts of your site they should avoid and which areas they can access.
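To make the “set of instructions” idea concrete, here is a minimal sketch of how a well-behaved crawler might consult robots.txt before fetching a page. It assumes Python’s standard-library urllib.robotparser module; the example.com URLs and the “ExampleBot” name are hypothetical. This parser is simpler than Google’s (for instance, it applies the first matching rule rather than the most specific one), so treat it as an illustration of the idea, not a replica of any search engine.

```python
from urllib.robotparser import RobotFileParser

# A tiny robots.txt supplied as text so the sketch runs offline.
# A real crawler would instead call set_url(".../robots.txt") and read().
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
"""


def allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if robots_txt lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# "ExampleBot" is a hypothetical crawler name; it falls under the * group.
for url in ("https://example.com/blog/post", "https://example.com/admin/login"):
    verdict = "crawl" if allowed(ROBOTS_TXT, "ExampleBot", url) else "skip"
    print(f"{verdict}: {url}")
```

Running it prints “crawl” for the blog URL and “skip” for the admin URL.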

What should be in robots.txt?

Here’s where the real fun begins. You want search engines to find and index your website, but you also want to keep their crawlers out of certain areas. So, how do you strike that balance? Let’s break it down.

Allow the good stuff

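Begin with the “Allow” directive, which explicitly permits bots to crawl a path. Everything is crawlable by default, so “Allow” does its most important work when it carves an exception out of a broader “Disallow” rule, but it can also spell out content you definitely want people to see. For example: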

```text
User-agent: Googlebot
Allow: /awesome-content/
```

Disallow the junk

On the other side, there’s the “Disallow” directive. Use this to keep the search engine bots away from pages that don’t add value, like login pages or admin panels. For example:

```text
User-agent: *
Disallow: /login/
```

Sorry, bots, no backstage passes for you!
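Because everything is crawlable by default, “Disallow” does the blocking and “Allow” is most useful for carving an exception out of a blocked folder. This sketch checks that with Python’s urllib.robotparser; the /login/help/ path and the “ExampleBot” name are hypothetical. Google resolves conflicts by picking the most specific (longest) matching rule, while urllib.robotparser uses the first rule that matches, so the “Allow” line is listed first: an order on which both interpretations agree.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: keep bots out of /login/ but let them crawl its help pages.
# urllib.robotparser applies the FIRST rule whose path prefix matches, so the
# narrower Allow line goes first; Google's longest-match rule agrees here.
ROBOTS_TXT = """\
User-agent: *
Allow: /login/help/
Disallow: /login/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# /awesome-content/ matches no rule, so it is allowed by default.
for path in ("/awesome-content/", "/login/", "/login/help/reset-password"):
    print(path, parser.can_fetch("ExampleBot", path))
```

This prints True, False, True: the unlisted page is crawlable by default, /login/ is blocked, and the help page is let back in by the “Allow” rule.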

Rules for specific user-agents

You can create specific rules for different search engine bots. For instance:

```text
User-agent: Bingbot
Disallow: /not-for-bing/
```

You’re telling Bingbot, “You can’t enter here!”
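One detail trips many people up: a crawler that finds a group naming it follows that group and ignores the wildcard (*) group entirely. This sketch shows it with Python’s urllib.robotparser; the paths are illustrative and “OtherBot” is a hypothetical crawler.

```python
from urllib.robotparser import RobotFileParser

# The wildcard group blocks /private/ for everyone, except that Bingbot
# has its own group, so it never reads the wildcard rules.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/

User-agent: Bingbot
Disallow: /not-for-bing/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for agent in ("Bingbot", "OtherBot"):  # "OtherBot" is a hypothetical crawler
    for path in ("/private/", "/not-for-bing/"):
        print(f"{agent:9} {path:15} allowed={parser.can_fetch(agent, path)}")
```

Bingbot may crawl /private/ here, while OtherBot may not. If Bingbot should also stay out of /private/, repeat that “Disallow” line inside the Bingbot group.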

Common mistakes to avoid

Remember, robots.txt can be a double-edged sword, so wield it wisely:

- Blocking all bots: Unless you have a very good reason (or really want to go off the grid), don’t pair “User-agent: *” with “Disallow: /”. That combination blocks every compliant bot from your entire site, essentially telling the world that your website is closed for business.

- Using irrelevant rules: Don’t write “Disallow” rules that cover sections of your website you want search engines to index. Rules match by path prefix, so a broad rule can catch more pages than you intended.

- Neglecting updates: Your website evolves, and so should your robots.txt file. Update it regularly to keep up with your site’s changing landscape.
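The difference between blocking everything and blocking nothing is a single character, which makes the first mistake easy to make. This sketch, assuming Python’s urllib.robotparser and a small can_crawl() helper written just for this illustration, shows that “Disallow: /” shuts out every path, while an empty “Disallow:” value blocks nothing at all.

```python
from urllib.robotparser import RobotFileParser


def can_crawl(robots_txt: str, path: str, agent: str = "ExampleBot") -> bool:
    """Hypothetical helper: parse robots_txt and report whether agent may fetch path."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, path)


# "Closed for business": a single slash matches every path for every bot.
BLOCK_ALL = "User-agent: *\nDisallow: /\n"

# An empty Disallow value blocks nothing, despite how it may look.
BLOCK_NOTHING = "User-agent: *\nDisallow:\n"

for path in ("/", "/products/", "/blog/post"):
    print(path, can_crawl(BLOCK_ALL, path), can_crawl(BLOCK_NOTHING, path))
```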

Extra: Don’t forget to test your robots.txt

Check your newly optimised robots.txt before you unleash it on your live website. Google Search Console can help here: its robots.txt report, which replaced the older robots.txt Tester, shows the robots.txt file Google fetched for your site along with any errors or warnings.
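Alongside a search engine’s own tools, you can add a quick local check to your workflow. This sketch, assuming Python’s standard-library urllib.robotparser and a hypothetical list of must-crawl pages, reports which of them a draft robots.txt would block. Here it catches a “Disallow: /pro” rule that, because rules match by path prefix, also blocks /products/. urllib.robotparser does not implement every extension Google supports (such as * and $ wildcards inside paths), so treat this as a complement to Search Console, not a replacement.

```python
from urllib.robotparser import RobotFileParser


def blocked_pages(robots_txt, agents, must_crawl):
    """Return (agent, path) pairs that robots_txt would block but should not."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [
        (agent, path)
        for agent in agents
        for path in must_crawl
        if not parser.can_fetch(agent, path)
    ]


# Draft rules with a too-broad prefix: "/pro" also matches "/products/".
DRAFT = """\
User-agent: *
Disallow: /private/
Disallow: /pro
"""

# Hypothetical pages that must stay crawlable.
MUST_CRAWL = ["/", "/blog/", "/products/shoes"]

for agent, path in blocked_pages(DRAFT, ["Googlebot", "Bingbot"], MUST_CRAWL):
    print(f"{agent} would be blocked from {path}")
```

With this draft it reports /products/shoes as blocked for both Googlebot and Bingbot; an empty result means none of the listed pages is blocked.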

Best practices for robots.txt

Here are some golden nuggets of advice for your robots.txt file:

Add sitemap references: Include a “Sitemap” line with the full URL of each of your sitemaps in your robots.txt to help search engines find and crawl your content efficiently.

Check for errors: Regularly monitor your robots.txt for errors. Broken or overly restrictive rules can seriously hurt your SEO.

Stay informed: Keep up to date on search engine guidelines. Google and other search engines may change their crawling behaviour over time.
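For the sitemap tip, a “Sitemap” line takes the sitemap’s full, absolute URL and is not tied to any user-agent group. The sketch below shows a parser reading it back; it assumes Python’s urllib.robotparser (site_maps() needs Python 3.8 or later), and the example.com URL is hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical file: one group of rules plus a file-wide Sitemap line.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# site_maps() returns the listed sitemap URLs, or None if there are none.
print(parser.site_maps())  # ['https://www.example.com/sitemap.xml']
```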

A robots.txt example

Now, let’s roll up our sleeves and get practical. Here’s an example robots.txt file that shows how to put these concepts into action:

```text
User-agent: *
Disallow: /private/
Disallow: /admin/
Disallow: /temp/

User-agent: Googlebot
Allow: /blog/
Allow: /products/
Disallow: /private/
Disallow: /admin/
Disallow: /temp/

User-agent: Bingbot
Allow: /products/
Disallow: /private/
Disallow: /admin/
Disallow: /temp/
```

In this example, we have three groups, each targeting a different user-agent: the universal wildcard (*), Googlebot, and Bingbot. A crawler that finds a group naming it follows only that group and ignores the wildcard group, so Googlebot and Bingbot do not inherit the general rules automatically. That is why the shared “Disallow” lines are repeated in each named group: it lets you add bot-specific rules while keeping the general rules in force for every bot.

- For all bots, the /private/, /admin/, and /temp/ directories are off-limits.

- Googlebot is explicitly allowed to crawl the /blog/ and /products/ sections, which are the public spaces of the website.

- Bingbot is explicitly allowed to crawl the /products/ section and, like every other bot, is kept out of /private/, /admin/, and /temp/.

Remember, the specific rules and directory paths should be adapted to your website’s structure and content. This is just a basic example to illustrate the concept.
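To double-check a file like this before publishing it, you can print a small allow/deny matrix. This sketch uses Python’s urllib.robotparser on a version of the example in which the Googlebot and Bingbot groups repeat the shared “Disallow” lines (a named group replaces the wildcard group rather than adding to it). “OtherBot” is a hypothetical crawler and mark() is a helper written for this illustration.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Disallow: /admin/
Disallow: /temp/

User-agent: Googlebot
Allow: /blog/
Allow: /products/
Disallow: /private/
Disallow: /admin/
Disallow: /temp/

User-agent: Bingbot
Allow: /products/
Disallow: /private/
Disallow: /admin/
Disallow: /temp/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

AGENTS = ["Googlebot", "Bingbot", "OtherBot"]  # "OtherBot" is hypothetical
PATHS = ["/blog/", "/products/", "/private/", "/admin/", "/temp/"]


def mark(agent: str, path: str) -> str:
    """Hypothetical helper: 'Y' if agent may fetch path, else 'N'."""
    return "Y" if parser.can_fetch(agent, path) else "N"


# One row per path, one column per crawler.
print("path".ljust(12) + "".join(a.rjust(11) for a in AGENTS))
for path in PATHS:
    print(path.ljust(12) + "".join(mark(a, path).rjust(11) for a in AGENTS))
```

Every column comes out the same: Y for /blog/ and /products/, N for the three private directories. If you delete the repeated /admin/ and /temp/ lines from the Googlebot group and rerun it, the Googlebot column flips to Y for those two paths.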

Tools for generating robots.txt automatically

Now, I understand that creating a robots.txt file manually can be a daunting task, especially if you’re dealing with a complex website structure. Fortunately, several tools are available to help you generate robots.txt files quickly and accurately. Below, we’ll look at popular options for different website platforms:

Robots.txt for WordPress

- Yoast SEO plugin: This plugin is a must-have if you’re running a WordPress site. It assists with on-page SEO and includes a user-friendly feature for generating and editing your robots.txt file.

- All in One SEO Pack: Another popular SEO plugin for WordPress, All in One SEO Pack also provides an option to generate and customise your robots.txt file. It’s an excellent choice for those who prefer an alternative to Yoast.

Robots.txt for Shopify

- Shopify SEO manager apps: The Shopify App Store offers various SEO manager apps, such as Plug in SEO and SEO Manager, that come with robots.txt generator features. These apps are specifically designed for Shopify users, making robots.txt configuration easier and more tailored to e-commerce sites.

Robots.txt for Webflow

- Webflow’s built-in tools: Webflow users have the advantage of using the platform’s native SEO settings. You can easily customise your robots.txt file within Webflow by going to the project settings and navigating to the SEO section. The feature is integrated into the platform, simplifying the process for designers and developers.

Robots.txt for Wix

- Wix SEO Wiz: Wix offers an SEO Wiz that assists users in generating a robots.txt file. It provides a step-by-step guide to optimise your website’s SEO, including creating and configuring your robots.txt file.

- Third-party SEO tools: While Wix’s native SEO features are user-friendly, you can opt for third-party SEO tools that cater to Wix websites. Tools like SiteGuru or SEMrush can help you efficiently create and manage your robots.txt file.

These tools and plugins are designed to make your life easier. They automate generating a robots.txt file and often offer user-friendly interfaces to customise rules based on your specific needs.

At UniK SEO, we consistently get the same questions about robots.txt. As a bonus, you’ll find the most frequently asked ones below. Our SEO team will try to answer them as briefly as possible.

Do all search engines follow the robots.txt rules?

Most major search engines, including Google, Bing, and Yahoo, follow robots.txt rules. However, compliance is voluntary: smaller search engines and badly behaved bots may ignore these directives.

Can I hide sensitive information using robots.txt?

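No, robots.txt is not a security measure. It’s just a guideline for well-behaved bots, and because the file is publicly readable, listing sensitive paths in it can even draw attention to them. If you need to secure sensitive data, use other means, such as password protection.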

Is robots.txt the same as a “noindex” tag?

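No, they are not the same. Robots.txt controls crawling, while a “noindex” tag on a webpage tells search engines not to index that specific page. A crawler can only see a “noindex” tag on a page it is allowed to crawl, so don’t block a page in robots.txt if you are relying on “noindex” to keep it out of search results.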

Still trying to figure out how to optimise your robots.txt? Reach out now for a free SEO analysis!